Introduction

There are lots of articles explaining what is important and what you should consider when securing your Kubernetes configurations, but I have not found many that guide you through the steps of implementing these recommendations. I am not talking about securing the application code (something software engineers should be used to) or the containers (a topic for another time).

These recommendations are in the realm of:

- the services in the containers should not run as root

- the containers should not be privileged or be allowed to escalate privileges

- the container file system should be read-only
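As a rough preview, these three recommendations map onto fields of a container's securityContext. The fragment below is only a sketch of those fields, not a complete manifest; the rest of this article introduces them one at a time and deals with the side effects.

```yaml
# Container-level fragment: spec.template.spec.containers[].securityContext
securityContext:
  runAsNonRoot: true               # refuse to start the container as UID 0
  privileged: false                # no privileged mode (this is already the default)
  allowPrivilegeEscalation: false  # the process cannot gain more privileges than its parent
  readOnlyRootFilesystem: true     # the container's root file system is mounted read-only
```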

Introducing the KCCSS and kube-scan

As Kubernetes matures, a common language and understanding of how to secure configurations becomes important. That is what the Kubernetes Common Configuration Scoring System (KCCSS) tries to address by suggesting a standard way to identify workloads that are risky because of their configuration. The idea of the KCCSS is comparable to the Common Vulnerability Scoring System (CVSS), the industry standard for rating vulnerabilities.

Octarine has implemented kube-scan as a rules-based tool to determine the risk score of a workload, and we will use kube-scan with its default rule set throughout this exercise.

You should install kube-scan from its GitHub repo if you want to follow along (I have changed KUBESCAN_REFRESH_STATE_INTERVAL_MINUTES to 1 to speed up refreshes).

TL;DR

This article will take you step-by-step from an insecure (from the KCCSS point-of-view) configuration to a configuration that is as secure as possible.

Starting-point

We start from a simple Kubernetes deployment based on Nginx and exposed by an ingress (if your ingress controller is not Traefik you may need to change the kubernetes.io/ingress.class annotation).
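For readers on a different controller, the change is confined to one annotation on the Ingress. A hedged fragment, assuming the ingress-nginx controller, whose class value is nginx (this value is an assumption about your cluster; the setup in this article uses Traefik):

```yaml
# Fragment of the Ingress metadata only; everything else in the manifest stays as it is.
metadata:
  annotations:
    kubernetes.io/ingress.class: nginx   # assumed controller class, adjust to your cluster
```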

Deploy

    kubectl create ns kccss-start
    kubectl -n kccss-start apply -f https://gist.githubusercontent.com/asksven/224a07ca5723c60f342429f3ce42e3d2/raw/083c79a6e7819c9f8c126a305e29915cf866d65c/deployment-start.yaml

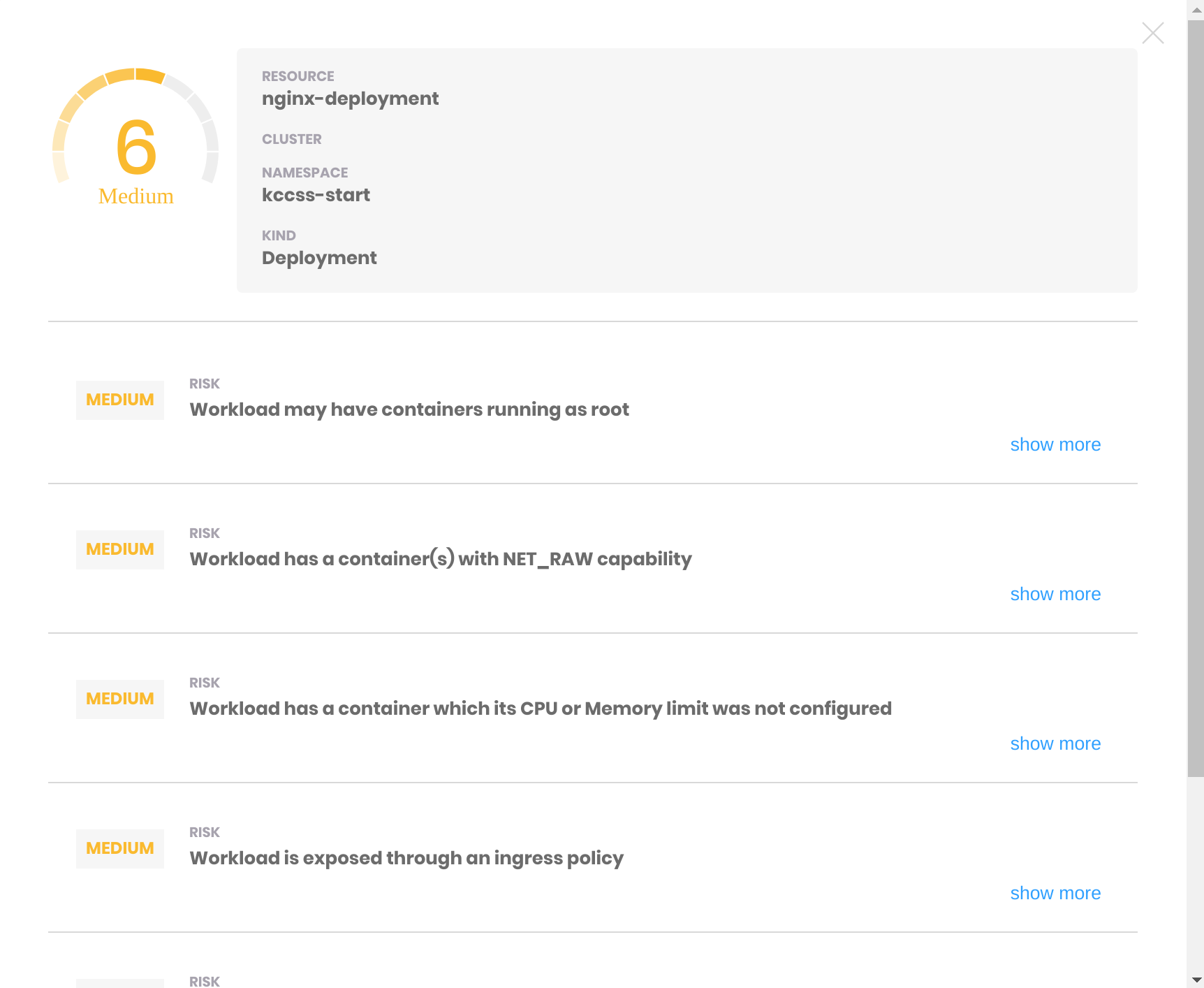

Result

The kube-scan result shows a KCCSS score of 6 and lists the following issues:

- Workload may have containers running as root

- Workload has a container with NET_RAW capability

- Workload has a container which its CPU or Memory limit was not configured

- Workload is exposed through an ingress policy

- Workload allows privilege escalation

- Workload has a container(s) with writable file system

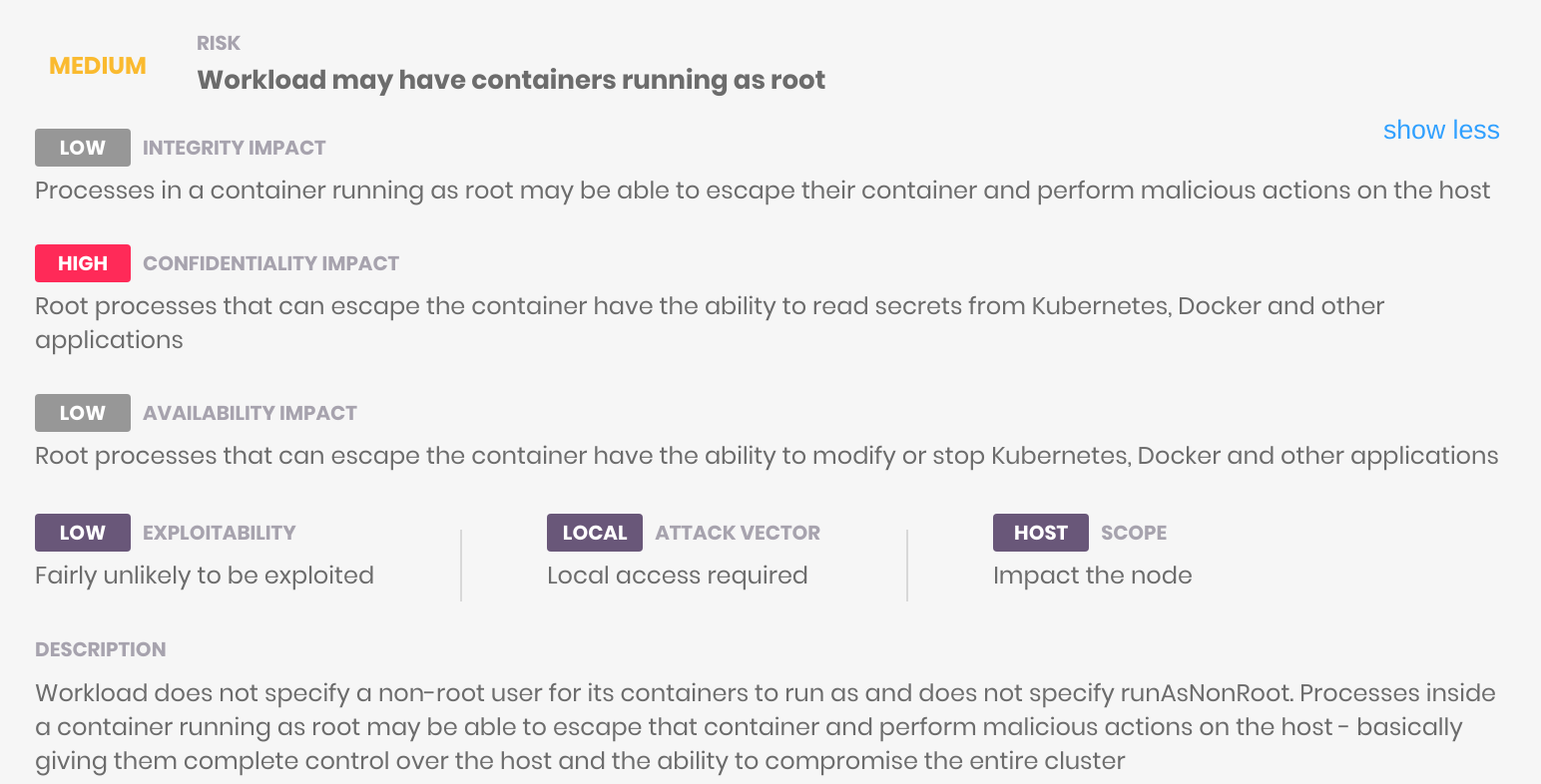

Looking into the details of an issue, e.g. “Workload may have containers running as root”, you can find an explanation of the issue as well as hints on how to fix it.

How I got there

We will address the issues one by one, incrementally changing the manifest. The final result is shown at the end.

Workload may have containers running as root

In order to fix this issue we add a securityContext with runAsNonRoot: true to the container in the deployment’s {spec.template.spec.containers}, and a securityContext with runAsUser to {spec.template.spec}:

    spec:
      securityContext:
        runAsUser: 999
      containers:
      - image: nginx:latest
        imagePullPolicy: Always
        name: nginx
        ports:
        - containerPort: 80
          protocol: TCP
        securityContext:
          runAsNonRoot: true

Re-deploying causes an error:

    nginx-deployment-6566d58467-86ns5 0/1 CrashLoopBackOff 6 10m

A closer look shows the problem:

    [emerg] 1#1: mkdir() "/var/cache/nginx/client_temp" failed (13: Permission denied)

This shows that not all containers play well with restricted permissions. Fortunately, there is an alternative Docker image that better suits our needs: nginxinc/nginx-unprivileged. Replacing nginx:latest with nginxinc/nginx-unprivileged in {spec.template.spec.containers.image} fixes the issue. Please also note that we need to change the containerPort as well as the service’s targetPort to 8080, because the unprivileged image listens on port 8080 instead of 80.

This is what the deployment looks like after that first step:

    spec:
      securityContext:
        runAsUser: 999
      containers:
      - image: nginxinc/nginx-unprivileged:latest
        imagePullPolicy: Always
        name: nginx
        ports:
        - containerPort: 8080
          protocol: TCP
        securityContext:
          runAsNonRoot: true
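The matching Service change is not shown in the snippet above. As a minimal sketch, assuming the Service is called nginx-service, listens on port 80, and selects the pods through the label app: nginx (the name and port are assumptions; the label is the one the network policy in this article relies on), only targetPort needs to move:

```yaml
apiVersion: v1
kind: Service
metadata:
  name: nginx-service        # assumed name, use the one from your manifest
spec:
  selector:
    app: nginx               # assumed to match the labels on the deployment's pods
  ports:
  - port: 80                 # port the ingress talks to (assumed unchanged)
    targetPort: 8080         # must follow the new containerPort
    protocol: TCP
```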

Workload has a container(s) with NET_RAW capability

In order to fix this issue we add capabilities.drop with the entry NET_RAW to the securityContext of the container in the deployment’s {spec.template.spec.containers}:

    spec:
      securityContext:
        runAsUser: 999
      containers:
      - image: nginxinc/nginx-unprivileged:latest
        imagePullPolicy: Always
        name: nginx
        ports:
        - containerPort: 8080
          protocol: TCP
        securityContext:
          capabilities:
            drop:
            - NET_RAW
          runAsNonRoot: true

Workload has a container which its CPU or Memory limit was not configured

In order to fix this issue we add resources.limits and resources.requests to the deployment’s {spec.template.spec.containers}. Pick requests and limits carefully, depending on the docker image and on the real load.

    spec:
      securityContext:
        runAsUser: 999
      containers:
      - image: nginxinc/nginx-unprivileged:latest
        imagePullPolicy: Always
        name: nginx
        ports:
        - containerPort: 8080
          protocol: TCP
        securityContext:
          capabilities:
            drop:
            - NET_RAW
          runAsNonRoot: true
        resources:
          limits:
            cpu: "100m"
            memory: "10Mi"
          requests:
            cpu: "80m"
            memory: "4Mi"

Workload is exposed through an ingress policy

Well, that is funny, because serving traffic is the whole purpose of a web server: there is nothing we can do about this issue itself. I think this is an area where kube-scan (tested on v20.1.1) could improve, as networking is not black and white. What we can do is put network policies in place to restrict the traffic to and from the workload to a minimum:

- deny inbound traffic not coming from the ingress controller

- deny outbound traffic completely as our workload does not need any

This collection of network policy recipes is a good starting point. When denying outbound traffic, always keep in mind that DNS queries (to CoreDNS) must be taken into account.

For our namespace we will add two network policies:

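    apiVersion: networking.k8s.io/v1
    kind: NetworkPolicy
    metadata:
      name: web-allow-ingress
    spec:
      podSelector:
        matchLabels:
          app: nginx
      ingress:
      # ports and from are part of the same rule, so both conditions must hold
      - ports:
        - port: 8080  # the container port: policies are evaluated against the pod, not the service
        from:
        - namespaceSelector:
            matchLabels:
              purpose: ingress
    ---
    kind: NetworkPolicy
    apiVersion: networking.k8s.io/v1
    metadata:
      name: default-deny-all-egress
    spec:
      policyTypes:
      - Egress
      podSelector: {}
      egress: []

These have the following effects:

- web-allow-ingress grants inbound traffic on port 8080 coming from the namespace labeled purpose=ingress (my ingress controller sits in the traefik namespace, which has been labeled accordingly: kubectl label namespace/traefik purpose=ingress). The port is the container port (8080) rather than the service port (80), because network policies apply to the traffic as it reaches the pod. If ports and from were listed as two separate rules, either condition alone would be enough to let traffic in.

- default-deny-all-egress denies any outbound (egress) traffic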
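Our Nginx workload needs no outbound traffic at all, but a workload that resolves hostnames would break under default-deny-all-egress. A common approach, sketched here under the assumption that allowing port 53 to any destination is acceptable in your cluster, is an additional policy that re-opens DNS only (the policy name is hypothetical). Network policies are additive, so this one combines with the deny-all policy rather than replacing it:

```yaml
apiVersion: networking.k8s.io/v1
kind: NetworkPolicy
metadata:
  name: allow-dns-egress      # hypothetical name
spec:
  podSelector: {}             # applies to every pod in the namespace
  policyTypes:
  - Egress
  egress:
  - ports:                    # no "to" clause: any destination, but only on port 53
    - port: 53
      protocol: UDP
    - port: 53
      protocol: TCP
```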

Workload allows privilege escalation

Now that we have our container running as non-root, this issue can be resolved by adding allowPrivilegeEscalation: false to the securityContext of the container in {spec.template.spec.containers}:

    spec:
      securityContext:
        runAsUser: 999
      containers:
      - image: nginxinc/nginx-unprivileged:latest
        imagePullPolicy: Always
        name: nginx
        ports:
        - containerPort: 8080
          protocol: TCP
        securityContext:
          capabilities:
            drop:
            - NET_RAW
          runAsNonRoot: true
          allowPrivilegeEscalation: false
        resources:
          limits:
            cpu: "100m"
            memory: "10Mi"
          requests:
            cpu: "80m"
            memory: "4Mi"

Workload has a container with writable file system

Now that we have our container running as non-root, this issue can be resolved by adding readOnlyRootFilesystem: true to the securityContext of the container in {spec.template.spec.containers}:

    spec:
      securityContext:
        runAsUser: 999
      containers:
      - image: nginxinc/nginx-unprivileged:latest
        imagePullPolicy: Always
        name: nginx
        ports:
        - containerPort: 8080
          protocol: TCP
        securityContext:
          capabilities:
            drop:
            - NET_RAW
          runAsNonRoot: true
          allowPrivilegeEscalation: false
          readOnlyRootFilesystem: true
        resources:
          limits:
            cpu: "100m"
            memory: "10Mi"
          requests:
            cpu: "80m"
            memory: "4Mi"

Here again, re-deploying causes an error:

    nginx-deployment-54bdd6796f-wlxkv 0/1 CrashLoopBackOff 4 2m6s

A closer look shows the problem:

    [emerg] 1#1: mkdir() "/tmp/proxy_temp" failed (30: Read-only file system)
    nginx: [emerg] mkdir() "/tmp/proxy_temp" failed (30: Read-only file system)

This is caused by readOnlyRootFilesystem: true, so we need a workaround. Nginx needs to create directories in /tmp, so that path has to be writable. We don’t want to create a persistent volume for each pod, so we mount an emptyDir volume at /tmp instead:

    spec:
      securityContext:
        runAsUser: 999
      containers:
      - image: nginxinc/nginx-unprivileged:latest
        imagePullPolicy: Always
        name: nginx
        ports:
        - containerPort: 8080
          protocol: TCP
        securityContext:
          capabilities:
            drop:
            - NET_RAW
          runAsNonRoot: true
          allowPrivilegeEscalation: false
          readOnlyRootFilesystem: true
        resources:
          limits:
            cpu: "100m"
            memory: "10Mi"
          requests:
            cpu: "80m"
            memory: "4Mi"
        volumeMounts:
        - mountPath: /tmp
          name: tmp
      volumes:
      - emptyDir: {}
        name: tmp
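Because /tmp is now the only writable path in the container, it may be worth capping how much it can hold. A minimal variation of the volume definition, assuming 16Mi is plenty for Nginx's temporary files (that figure is a guess, not a measured value):

```yaml
# Replaces the "volumes" entry in spec.template.spec
volumes:
- name: tmp
  emptyDir:
    sizeLimit: 16Mi   # assumed value; the pod is evicted if usage exceeds it
```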

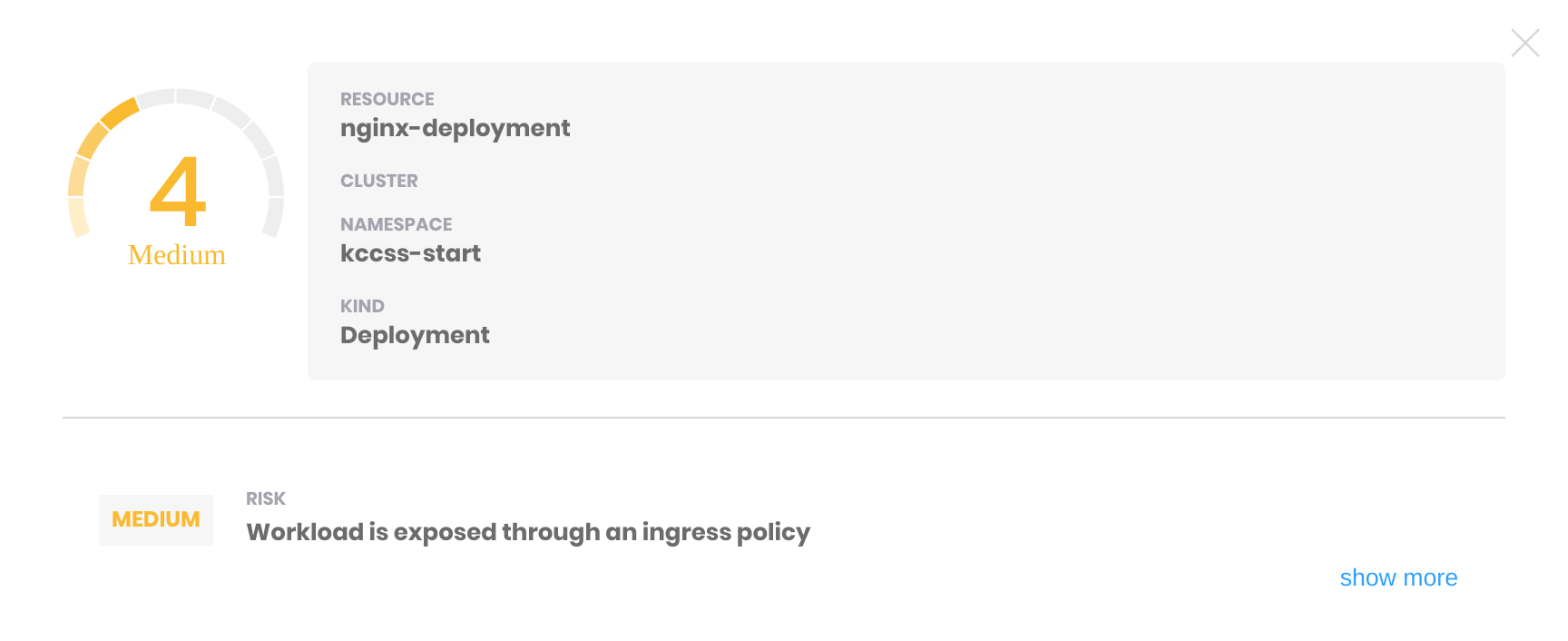

The final result

The final deployment can be found here.

The end result is a KCCSS score of 4, down from the initial 6.

Conclusion

Securing Kubernetes configurations is not that simple, and not all changes can be made in the manifests: if Docker images do not play nice, securing your configurations will require changes to your Dockerfiles as well.

The exercise is pretty tedious as well: imagine a more complex workload with tens of containers, where you would have to go through the same exercise for each of them. Pod security policies may help establish better standards and defaults in the future, even if their own future seems uncertain. Projects like Kyverno and Open Policy Agent’s Gatekeeper are alternatives, and it seems that the community is leaning toward OPA.